DistributedDataParallel non-floating point dtype parameter with

🐛 Bug: Using DistributedDataParallel on a model that has at least one non-floating point dtype parameter with requires_grad=False, with WORLD_SIZE <= nGPUs/2 on the machine, results in an error "Only Tensors of floating point dtype can re
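The precondition described in the report can be illustrated with a minimal CPU-only sketch. It builds a toy model that holds one integer parameter with requires_grad=False, lists parameters whose dtype is not floating point, and shows the same state registered as a buffer instead. The class names, the `step` attribute, and the `non_float_parameters` helper are hypothetical and do not come from the report. The sketch does not wrap the model in DistributedDataParallel or reproduce the error, and whether moving the tensor to a buffer avoids the error in a given PyTorch version is an assumption, not something the report confirms.

```python
import torch
import torch.nn as nn


class ModelWithIntParam(nn.Module):
    """Hypothetical toy model with one integer parameter (requires_grad=False)."""

    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(4, 2)
        # An integer tensor can only be a Parameter when requires_grad=False.
        self.step = nn.Parameter(torch.zeros(1, dtype=torch.long), requires_grad=False)


class ModelWithIntBuffer(nn.Module):
    """Same state, but the integer tensor is registered as a buffer."""

    def __init__(self):
        super().__init__()
        self.linear = nn.Linear(4, 2)
        # Buffers are saved in state_dict but are not returned by parameters().
        self.register_buffer("step", torch.zeros(1, dtype=torch.long))


def non_float_parameters(module):
    """Return the names of parameters whose dtype is not floating point."""
    return [name for name, p in module.named_parameters() if not p.is_floating_point()]


print(non_float_parameters(ModelWithIntParam()))   # ['step']
print(non_float_parameters(ModelWithIntBuffer()))  # []
```

Running a check like `non_float_parameters` before wrapping a model for distributed training is one way to find out whether the model matches the condition named in the report.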

Distributed PyTorch Modelling, Model Optimization, and Deployment

55.4 [Train.py] Designing the input and the output pipelines - EN - Deep Learning Bible - 4. Object Detection - Eng.

Optimizing model performance, Cibin John Joseph

Access Authenticated Using a Token_ModelArts_Model Inference_Deploying an AI Application as a Service_Deploying AI Applications as Real-Time Services_Accessing Real-Time Services_Authentication Mode

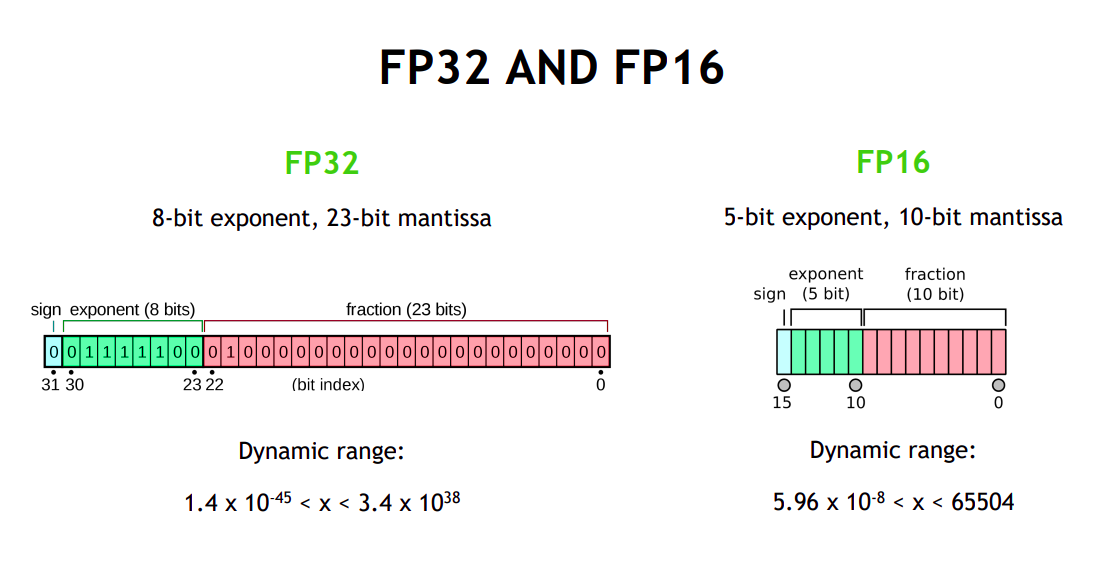

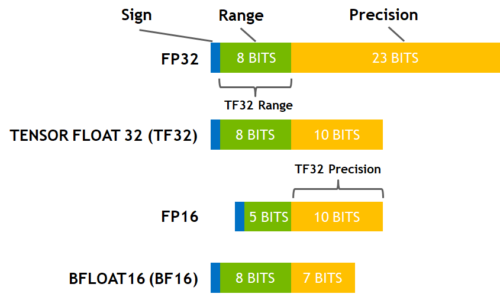

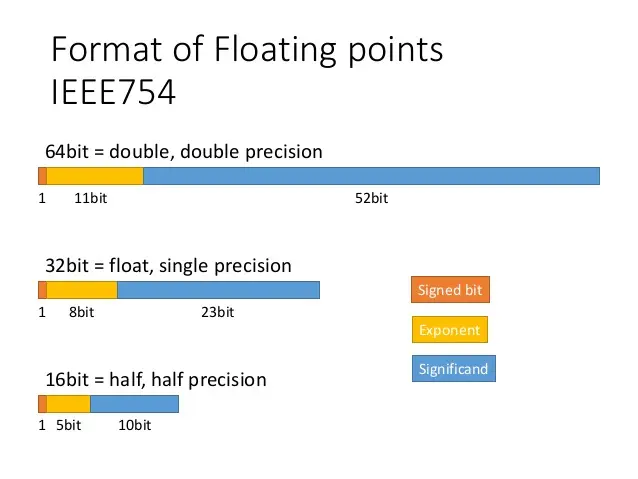

Performance and Scalability: How To Fit a Bigger Model and Train It Faster

Sharded Data Parallel FairScale documentation

A comprehensive guide of Distributed Data Parallel (DDP), by François Porcher

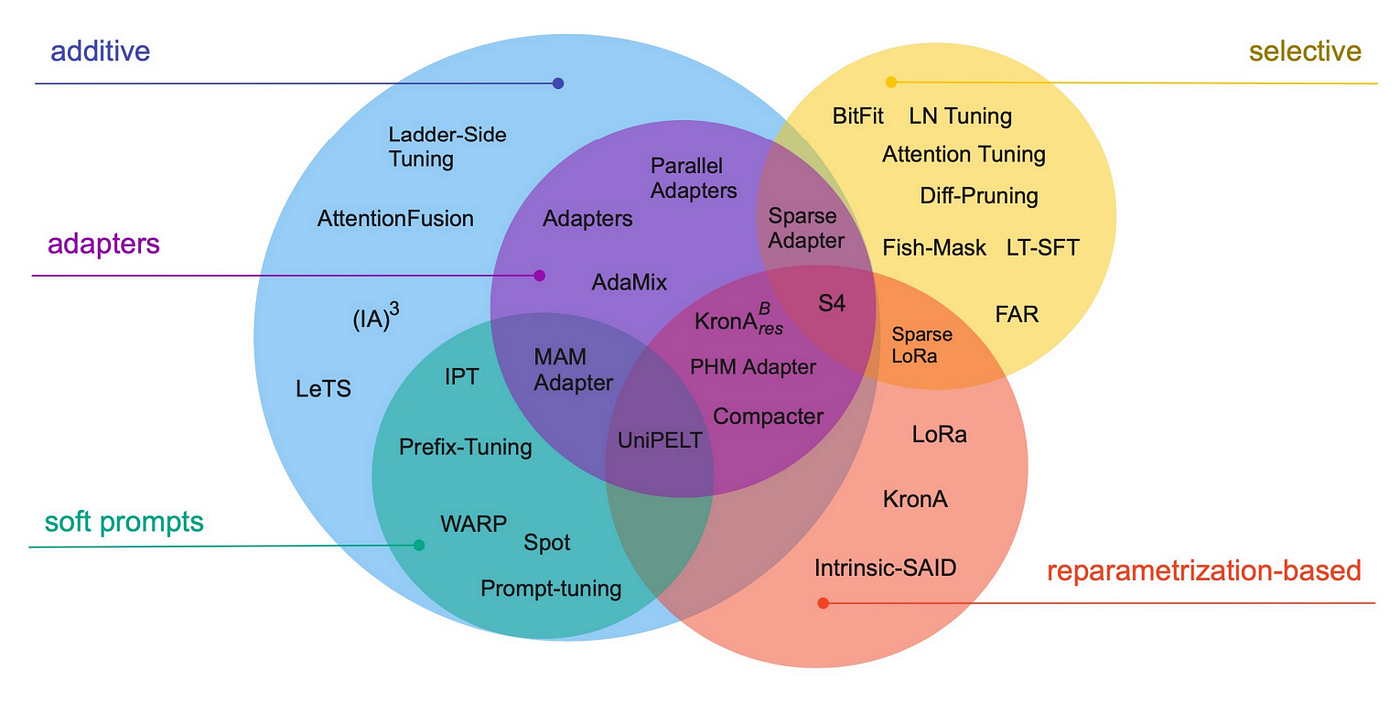

Aman's AI Journal • Primers • Model Compression

Finetune LLMs on your own consumer hardware using tools from PyTorch and Hugging Face ecosystem

PyTorch Numeric Suite Tutorial — PyTorch Tutorials 2.2.1+cu121 documentation