What's in the RedPajama-Data-1T LLM training set

RedPajama is “a project to create leading open-source models, starts by reproducing LLaMA training dataset of over 1.2 trillion tokens”. It’s a collaboration between Together, Ontocord.ai, ETH DS3Lab, Stanford CRFM, …

65-Billion-Parameter Large Model Pretraining Accelerated by 38

LaMDA to Red Pajama: How AI's Future Just Got More Exciting!

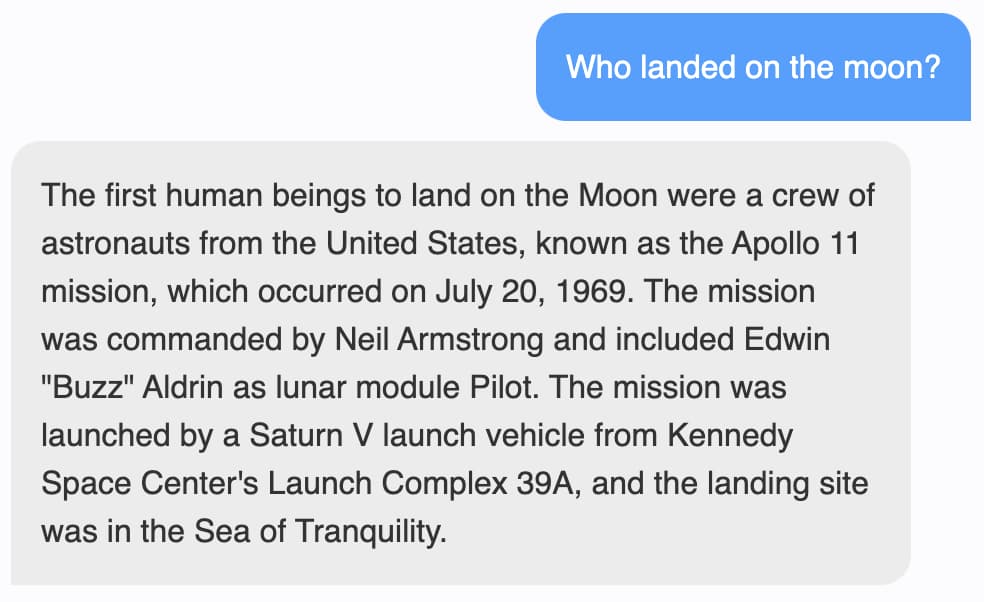

Collecting RLHF data - Argilla 1.26 documentation

RedPajama-Data-v2: An open dataset with 30 trillion tokens for

Red Pajama 2: The Public Dataset With a Whopping 30 Trillion Tokens

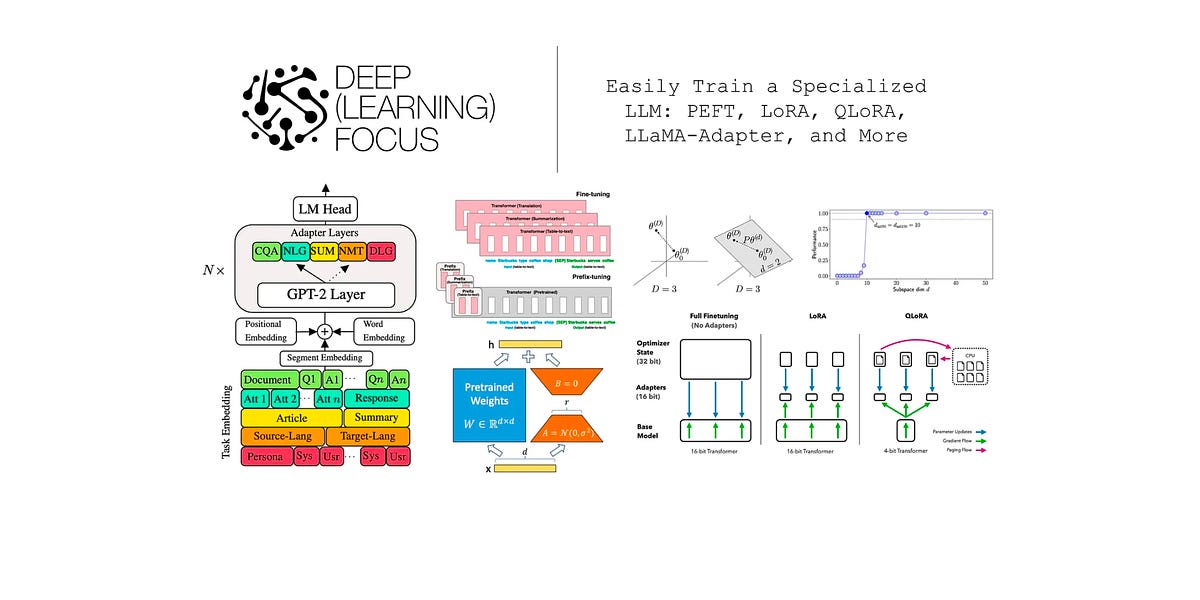

Easily Train a Specialized LLM: PEFT, LoRA, QLoRA, LLaMA-Adapter

Finetuning an LLM: RLHF and alternatives (Part I)

Web LLM runs the vicuna-7b Large Language Model entirely in your